Real-Time Processing: Instead of processing only stored data, Spark can process data as it arrives, so users get results in near real time.

Better Analytics: For analytics, Spark provides a variety of libraries, such as machine-learning algorithms and SQL queries. Its competitor, Hadoop MapReduce, offers only Map and Reduce functions, and this difference in analytical capability is one reason Spark outperforms MapReduce.
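To make the Map/Reduce contrast concrete, here is a minimal word count in plain Python that is built from only a map step and a reduce step. It is a hypothetical sketch of the two-phase model, not Hadoop's actual API; in PySpark the same job is usually written as a chain of RDD transformations such as `flatMap`, `map`, and `reduceByKey`.

```python
from collections import Counter
from functools import reduce
from typing import Dict, Iterable


def map_phase(line: str) -> Counter:
    """Map step: turn one input line into partial per-word counts."""
    return Counter(line.lower().split())


def reduce_phase(left: Counter, right: Counter) -> Counter:
    """Reduce step: merge two partial results by summing counts per word."""
    return left + right


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    # The whole job uses only two primitives: map over the input, then reduce.
    partial_counts = map(map_phase, lines)
    return dict(reduce(reduce_phase, partial_counts, Counter()))


if __name__ == "__main__":
    print(word_count(["Spark and MapReduce", "spark is fast"]))
```

In this model, anything beyond simple aggregation (joins, iterative algorithms, SQL-style queries) has to be expressed as further rounds of map and reduce.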

Pip install Apache Spark: download

Go to the official Apache Spark download page and choose the latest release.
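If you script the download, choosing the latest release means comparing version numbers numerically rather than as strings (otherwise a patch release ending in ".10" would sort below one ending in ".9"). The sketch below assumes a hypothetical list of plain numeric release versions and assumes the archive.apache.org directory layout shown in the URL; check both against the download page before relying on them.

```python
from typing import Iterable, Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """Turn '3.0.1' into (3, 0, 1) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def latest_release(versions: Iterable[str]) -> str:
    """Return the highest plain numeric version (no '-preview' suffixes)."""
    return max(versions, key=parse_version)


def archive_url(version: str, hadoop: str) -> str:
    # Assumed URL layout of the Apache archive; verify on the download page.
    return (
        "https://archive.apache.org/dist/spark/"
        f"spark-{version}/spark-{version}-bin-hadoop{hadoop}.tgz"
    )


if __name__ == "__main__":
    releases = ["2.4.8", "3.0.0", "3.0.1"]  # hypothetical list of releases
    newest = latest_release(releases)
    print(newest, archive_url(newest, "2.7"))
```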

Pip install Apache Spark: source code

Clone the Sedona GitHub source code and run the following command. This is the default distribution, with Hive 2.3 and Hadoop 2. However, PyPI users have only a single option.

Pip install Apache Spark: installation

To install pip, download get-pip.py to a folder on your computer, open a command prompt, navigate to the folder containing get-pip.py, and run `python get-pip.py`.

To install PySpark along with Sedona Python in one go, use the `spark` extra: `pip install apache-sedona[spark]`. Alternatively, you can install Sedona Python from source.

When you download Apache Spark from an official Apache mirror, it gives you many package options.

Here are some distinctive features that make Apache Spark a better choice than its competitors:

Multi-Language Support: Spark lets developers build applications in Java, Python, R, and Scala.

Speed: Spark uses a DAG scheduler (which schedules jobs and determines a suitable location for each task), query execution, and supporting libraries to perform tasks efficiently and quickly.

Data Storage: Spark stores structured data in Spark DataFrames and unstructured data in Resilient Distributed Datasets (RDDs).

The Apache Beam and Spark APIs are similar, so if you know Spark, you already know the basic concepts of Beam.
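The DAG scheduler mentioned above orders work so that a step runs only after the steps it depends on. As a toy illustration (not Spark's actual `DAGScheduler`), the sketch below uses Python's standard `graphlib` module (Python 3.9+) to group a hypothetical set of stages into waves; stages in the same wave do not depend on each other, so a real engine could run them in parallel.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set


def schedule_stages(dependencies: Dict[str, Set[str]]) -> List[List[str]]:
    """Group stages into waves; every stage runs after all of its inputs.

    `dependencies` maps each stage to the set of stages it depends on.
    Raises graphlib.CycleError if the graph is not a DAG.
    """
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()
    waves: List[List[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for stable output
        waves.append(ready)
        sorter.done(*ready)  # mark the whole wave as finished
    return waves


if __name__ == "__main__":
    # Hypothetical job: read two inputs, join them, then aggregate.
    job = {
        "read_logs": set(),
        "read_users": set(),
        "join": {"read_logs", "read_users"},
        "aggregate": {"join"},
    }
    for number, wave in enumerate(schedule_stages(job), start=1):
        print(f"wave {number}: {wave}")
```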

The Apache Spark provider for Airflow can be installed with `pip install apache-airflow-backport-providers-apache-spark`. If you already know Apache Spark, learning Apache Beam will feel familiar.

You can install this package on top of an existing Airflow 1.10 installation. Now it's time to set up the big data environment: download the latest available version of Apache Spark.

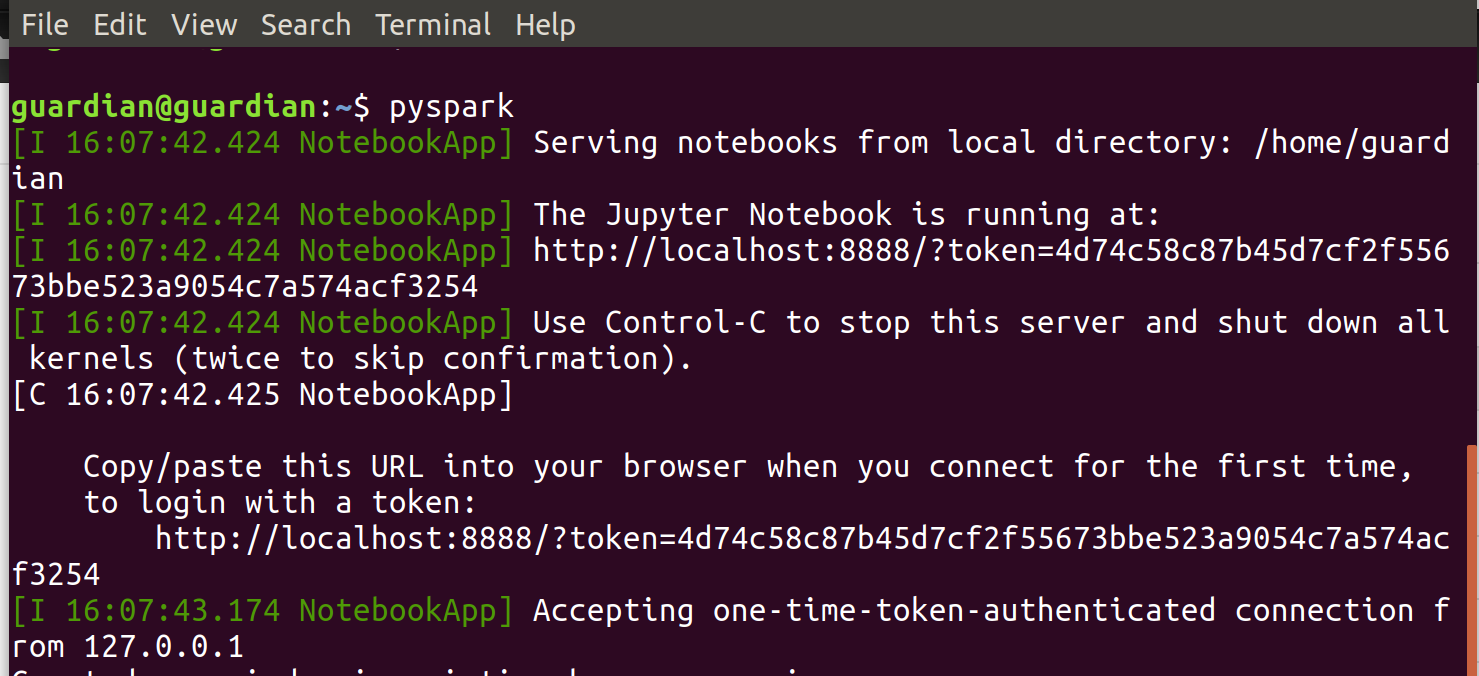

Apache Spark has a number of notable features that explain why it is used for large-scale data processing. Spark's built-in cluster manager is responsible for launching Spark applications on the machines.

The Airflow `apache.spark` provider can also be used without Kubernetes (14187).

To install pip and Jupyter, run `sudo apt install python3-pip` and `sudo apt install python3-notebook jupyter jupyter-core python-ipykernel`, then open Jupyter to check that it is working.
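Besides opening Jupyter, you can check from Python which of the relevant packages are installed. This minimal sketch uses the standard `importlib.metadata` module (Python 3.8+); the distribution names in the example are assumptions, so adjust them to whatever you actually installed.

```python
from importlib import metadata
from typing import Dict, Iterable, Optional


def installed_version(distribution: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if missing."""
    try:
        return metadata.version(distribution)
    except metadata.PackageNotFoundError:
        return None


def report(distributions: Iterable[str]) -> Dict[str, Optional[str]]:
    """Map each distribution name to its version (None = not installed)."""
    return {name: installed_version(name) for name in distributions}


if __name__ == "__main__":
    # Assumed distribution names; change them to match your setup.
    for name, version in report(["pyspark", "jupyter", "notebook"]).items():
        print(f"{name}: {version or 'not installed'}")
```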

Pip install Apache Spark: drivers

Apache Spark is widely used because of how it works. Its core data structure is the RDD (Resilient Distributed Dataset), an immutable, distributed collection of objects. An RDD can hold any type of Python, Java, or Scala object, including user-defined classes.

Spark follows a master/worker pattern: a central coordinator, called the driver, acts as the master, and its distributed workers are called executors. The third main component is the cluster manager, which, as the name indicates, manages the executors and drivers. Executors are launched by the cluster manager, and in some cases (for example, in cluster deploy mode) the driver is launched by it as well.

To install Airflow, you need to either downgrade pip to version 20.2.4 (`pip install --upgrade pip==20.2.4`) or, if you use pip 20.3, add the option `--use-deprecated legacy-resolver` to your `pip install` command.
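The driver/executor split can be mimicked on a single machine: a "driver" function cuts the input into partitions, a pool of worker threads plays the role of executors, and the driver collects the results. This is an analogy under simplifying assumptions (threads instead of separate processes on other machines, no cluster manager, no fault tolerance), not how Spark is implemented. As with an RDD transformation, the input is left unchanged and a new list is returned.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def partition(data: Sequence[T], num_partitions: int) -> List[List[T]]:
    """Driver step: split the input into at most `num_partitions` chunks."""
    size = max(1, -(-len(data) // num_partitions))  # ceiling division
    return [list(data[i:i + size]) for i in range(0, len(data), size)]


def run_job(data: Sequence[T], task: Callable[[T], R],
            num_executors: int = 2) -> List[R]:
    """Driver: partition the data and let "executors" process each chunk."""
    partitions = partition(data, num_executors)

    def process(chunk: List[T]) -> List[R]:
        # Executor step: apply the task to every record in one partition.
        return [task(record) for record in chunk]

    with ThreadPoolExecutor(max_workers=num_executors) as executors:
        # map() yields results in partition order, so output order is stable.
        results = list(executors.map(process, partitions))
    # Driver step: collect the partial results into one new list.
    return [value for chunk in results for value in chunk]


if __name__ == "__main__":
    numbers = [1, 2, 3, 4, 5]
    print(run_job(numbers, lambda x: x * x, num_executors=2))
    print(numbers)  # the input list is untouched
```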

Spark 3.0.0 was released on 18 June 2020 with many new features. Highlights include adaptive query execution, dynamic partition pruning, ANSI SQL compliance, and significant …

Because large amounts of data need fast processing, the processing engine must be efficient. Spark uses a DAG scheduler, memory caching, and query execution to process data as fast as possible, which makes it well suited to handling large data.

Note that the new dependency resolver in pip 20.3 does not yet work with Apache Airflow and might lead to installation errors, depending on your choice of extras.
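The pip advice for Airflow (downgrade to 20.2.4, or pass `--use-deprecated legacy-resolver` on pip 20.3) can be encoded in a small helper that builds the install command. This is a hypothetical sketch: it only assembles the argument list for `python -m pip install` and does not run it; pass the list to `subprocess.run` yourself if you want to execute it.

```python
import sys
from typing import List, Sequence, Tuple


def parse_pip_version(text: str) -> Tuple[int, ...]:
    """Turn a plain version string such as '20.3.1' into (20, 3, 1)."""
    return tuple(int(part) for part in text.split(".")[:3])


def airflow_install_command(packages: Sequence[str],
                            pip_version: Tuple[int, ...]) -> List[str]:
    """Build a `python -m pip install` command for the given packages.

    On pip 20.3.x the new resolver may fail for Airflow, so fall back to
    the legacy resolver, as the installation notes above recommend.
    """
    command = [sys.executable, "-m", "pip", "install", *packages]
    if pip_version[:2] == (20, 3):
        command += ["--use-deprecated", "legacy-resolver"]
    return command


if __name__ == "__main__":
    # Example only: on a real system, read the version from `pip --version`.
    cmd = airflow_install_command(
        ["apache-airflow-backport-providers-apache-spark"],
        parse_pip_version("20.3.1"),
    )
    print(" ".join(cmd))
```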

Pip install Apache Spark: professional use

Apache Spark is an open-source framework for big data processing, used by professional data scientists and engineers to process large amounts of data.
